Harmful Content on Social Media: CIPESA and AfricTivistes Call on Stakeholders

A workshop on platform responsibility and content moderation was held in Dakar this Thursday. Faced with the harmful effects that have accompanied the growing number of internet users, actors from the social media ecosystem explored the challenges of moderating digital content. They drew up recommendations aimed at promoting digital freedoms in Africa, particularly in Senegal.

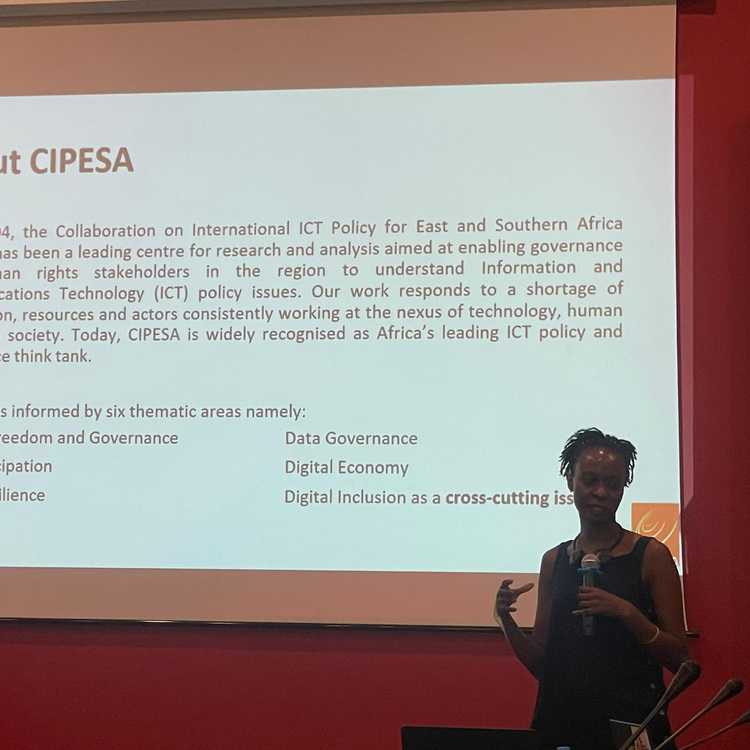

Organized by the Collaboration on International ICT Policy for East and Southern Africa (CIPESA) in collaboration with AfricTivistes, the meeting took a critical look at current issues, including data protection, online censorship, internet restrictions, surveillance, and violent or misleading online content. The latter remains a major challenge on the continent.

According to CIPESA representative Ashnah Kalemera, rising internet penetration is fueling the spread of hate speech, violent rhetoric, and fake news. In response to these trends on social media platforms, she advocates reporting harmful content as a solution. “Beyond the moderation carried out by governments and platforms themselves, a multi-stakeholder approach is needed to eradicate harmful content from technological platforms. If individuals do not take responsibility, misinformation spreads and can have regrettable consequences,” she warned.

Lively discussions revealed that self-moderation by users of harmful, illegal, or offensive content can be challenging. Some participants highlighted the subjective nature of human reporting in certain cases. “Users may flag content as inappropriate or dangerous solely based on their opinions,” noted Ababacar Diop from the Commission for Personal Data Protection.

Participants also noted that platforms face difficulties when public figures post inappropriate content and decisions to censor it have to be made. Moderation can likewise become complex when these public figures are themselves the targets of attacks on social media.

For this reason, Serge Koué, a blogger and IT expert, emphasized the importance of reaching consensus on moderation policies among platforms, users, and governments, whose interests and priorities differ.

Both CIPESA and AfricTivistes place strong emphasis on promoting responsible digital use, particularly on social media.

Maateuw Mbaye, Project Manager at Article 19, who conducted a case study on freedom of expression and content moderation in Senegal, called for collaboration between platforms, users, and governments to establish common standards. In his view, responsibility for moderation is shared. To this end, he put forward a list of recommendations, including clarifying terms of use, ensuring transparency in moderation processes, and establishing recourse mechanisms. Special attention was given to the individual responsibility of users in combating misinformation.

The event brought together various stakeholders, including journalists, influencers, representatives of organizations such as the Commission des Données Personnelles (CDP), and platforms such as Meta. Olivia Tchamba, Public Policy Manager at Meta, emphasized her company’s commitment to balancing giving users a voice with ensuring the primacy of reliable information. She called for self-censorship and responsible use on the part of users. According to her, Meta takes a five-level approach to content security and moderation, and its community standards evolve to strike this balance. Meta’s content moderation policy rests on its global presence, advanced moderation tools, common standards, and access to internal collaborators.

“Our goal is to enable everyone to participate in a connected community. With 3.88 billion users worldwide, including 204 million in Sub-Saharan Africa, it is essential for Meta to strike a balance between security, privacy protection, and moderation.”

“With support for around fifty languages, including Wolof, Meta is committed to promoting responsible use of its platforms without compromising the truth. The goal is to strike a balance between user autonomy and information accuracy.”

Who should report in the end?

Subjective user reporting aside, platforms are not faultless either: participants pointed to abuses, vague terms of use, a lack of transparency in moderation processes, and the absence of recourse mechanisms, among other issues.

“Humans may report simply because they dislike the content. This can be abusive and subjective,” stated Pape Ismaïla Dieng, communication and advocacy officer at AfricTivistes and moderator of the session. “This is why moderation matters: algorithms do not understand all languages.”

The discussions also highlighted differences in cultural and political context between countries, which can skew the judgment of algorithms, or even that of people who report content without understanding its context.

Participants called for collaboration among stakeholders to establish common standards, combined with efforts to raise user awareness, so as to avoid restricting freedom of expression under the guise of moderation.

Moreover, particular attention was given to individual user responsibility in combating misinformation.

Personal Data Collection: Where is the Control?

Regarding the protection of personal data collected by platforms, participants discussed the high risk of breaches of personal data confidentiality.

On safeguarding user privacy, participants questioned the responsibility of governments, as well as that of social media platforms, to respect privacy and to secure and manage personal information properly. According to Ababacar Diop, doing so helps build trust between platforms and users.

In Senegal and the Republic of Guinea, where internet shutdowns have recently become frequent, users are forced to turn to VPNs, which can expose their personal data.

Participants also questioned how data collected during operations such as sponsorship drives in Senegal is used.

In conclusion, participants left the workshop convinced that content moderation has become a crucial issue in the digital age, as misinformation and dangerous speech proliferate online.

Adopting innovative approaches to combating misinformation while respecting user privacy is becoming imperative.

The challenges are many, but by consolidating the content moderation efforts already under way, online platforms, working with authorities and citizens, can help create a more reliable and transparent environment that respects individual rights.
